Telerobotics FAQ

thinking through an underrated future technology

Recently, some of the building blocks for systems that let individuals virtually transport themselves and perform physical tasks remotely via robots have been coming together. By letting people travel at the speed of the internet, and by freeing human labour from the constraints of the human body, telerobotics systems have the potential to upend many areas: travel, construction, manufacturing, laboratories, and more.

What is telerobotics?

Telerobotics is the set of technologies that allows a person to feel as if they are present in the world in another location, while simultaneously enabling them to affect the world in that location.

Why should I care about telerobotics?

If telerobotics happens in a big way, it will make the physical world location-independent.

Economically, this would have huge implications by making labour able to travel across borders at the speed of the internet. Construction workers could work remotely from halfway across the world just as easily as computer programmers.

In terms of leisure, it would allow travel to anywhere in the blink of an eye. Travel may shift from being plane-based to internet-based.

It could expand the limits of human physical abilities by enabling superhuman strength, faster reflexes, or any feasible extension of the human form when imbued with robotic components.

What is the difference between telepresence and teleoperation?

Teleoperation is the operation of a robot at a distance. This is used in a wide variety of industries right now - operating cranes, drones, and so on.

Telepresence is the subset that enables the user to feel as if they have been virtually transported to a new location, while at the same time giving them the freedom to move around and interact with the world. It requires much more general technology, since what the robot does is defined by the user's desire to act rather than by a specific context of use.

In general, the domains likely to benefit from general-purpose telepresence, as opposed to task-specific teleoperation, are more unstructured and closer to human scale.

What are some important applications for telepresence?

There are a huge number of possibilities both economic and non-economic for the technology; I will attempt to elucidate a few here.

Travel - If telepresence robots get good enough, much of the need to travel could be eliminated. Why travel to Japan when you can walk around Tokyo from your living room in Toronto?

This one cuts both ways, by the way. Want an Italian dinner as part of your telepresence tour of Rome? Have the chef make it in your house via telepresence.

Business travel could also benefit. Factory tours, office tours, and site inspections could all be carried out remotely.

Construction - Building is notoriously hard to automate because buildings are assembled in so many different ways, and because physical interaction is difficult to automate in many of the situations that arise on site. Telerobotics could give construction machines the ability to operate in such hard-to-model situations by bringing in human judgement and dexterity as required. Construction telerobots would have numerous benefits over on-site human workers:

When there are no humans on site, safety requirements during construction can be reduced, bringing down building costs.

Workers would not have to travel to construction sites, saving time.

Workers could be pulled from a larger pool of labour around the world, driving costs in these sectors towards the median (hopefully accelerating progress in the physical world and helping to combat cost disease).

Maintenance and Manufacturing - to the extent that a) developed countries want to bring manufacturing back onshore, b) that manufacturing involves labour which is prohibitively expensive in their jurisdictions, and c) those processes are difficult or impossible to automate, telerobotics provides a solution by letting workers in locations where such labour is economically viable remote in to onshore factories.

There is also a long tail of cases where maintenance requires either shipping something back to the manufacturer or sending a maintenance engineer on site. These situations could be resolved by keeping a telerobot on site, operated remotely by the manufacturer's technician.

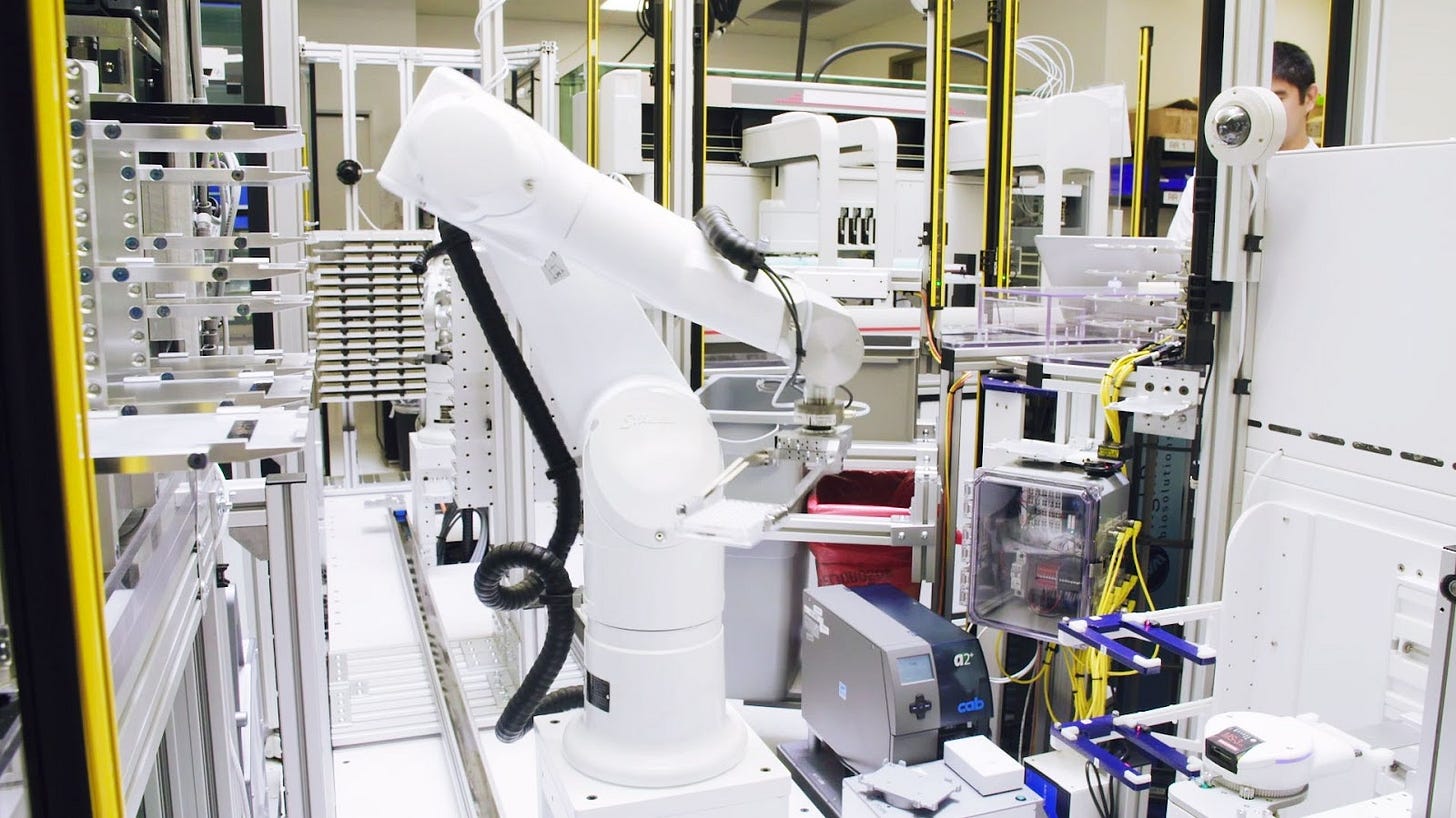

Scientific research - there is a tremendous amount of lab work that is still not automated and requires people to be physically present. Meanwhile, labs are unevenly distributed around the world, and expensive equipment is often underused. Improving access to, and utilisation of, such equipment could allow all lab hardware to be used the way raw compute is today: seamlessly timeshared and available to any researcher for a fee.

Elderly care - Although this sits at the demanding end of safety requirements, the cost of in-home care could be hugely reduced by renting fractional time on a robot with human-like capabilities. Conversely, telerobotics could also enable infirm or physically impaired people to act in the physical world at a normal level of capacity.

What previous efforts have there been? Why did they fail?

The basic idea of telerobotics is obvious, and there have been various efforts towards creating such systems going back to the mid-20th century. However, nobody has built a full-stack solution that gives a person controlling a robotic platform a sense of presence and the capability to act in spaces designed for humans.

Earlier efforts failed for two reasons: a lack of technology, and tailoring the technology to specific problems without generality in mind. Enabling technologies such as long-distance, high-bandwidth video I/O, legged robotics, and virtual reality did not exist until recently.

For example, recent efforts include "iPads on sticks", pioneered by companies such as Double Robotics and Anybots.

These failed because the use case was narrow, and the hardware was expensive compared with general-purpose tablets and smartphones.

There have also been projects that build systems from off-the-shelf components or adapt existing systems, such as The Centauro Project and Sanctuary AI.

Ben Reinhardt raises interesting questions about whether telepresence can be developed in a commercial or an academic context. In the former, the urge to build immediately useful products drives projects towards domain-specific solutions; in academia, incentives often drive labs to work on small pieces of the problem. In both cases the proximal milestone is often a demo which, abstracted from any serious context of use, produces systems that seem good for ten minutes but fail on contact with real problems.

Why do we need robotic telepresence for travel and meetings when we have Zoom?

Video conferencing technologies are limited in a variety of ways. They give the remote user no ability to move around in the world, creating a dependence on the local user. There is also the burden of giving continuous, undivided attention while staring at someone through a webcam.

Perhaps VR will meet this need for most business meetings. But people want to communicate in all manner of settings beyond the (virtual) boardroom, whether on a factory tour, a site inspection, or a walk through a physical space.

Why do we need robotic telepresence when we have robot autonomy?

Teleoperation has taken a back seat to full autonomy in robotics as it is often seen by roboticists as a less 'clean' solution. But in many cases, full physical automation is currently far away (construction), and in others it is a category error (you can't automate the experience of a site tour).

The solution is robotic telepresence, bringing many of the benefits of bits (travel at the speed of light, independence from location, equitable access) to the world of atoms (travel at the speed of a ship or the cost of a plane fare; location matters).

What about AGI?

If AGI (which for the purposes of the question I take to include physical intelligence) actually happens, then yes, telerobotics as a way to augment labour becomes irrelevant, as that labour would presumably be taken over by machines. It would still likely be useful for leisure and personal connection. [1]

How does telerobotics relate to the metaverse and VR?

In the short run, the race to develop metaverses will stimulate investment in enabling technologies. This is because of the large areas of technological overlap between telerobotics and VR-type technologies (animation, simulation, and computer vision are all used by robotics to some degree, and telerobotics itself will rely on great VR). Medium term, there may be a desire to allow the merging of the physical world and metaverse(s) in specific contexts, in which case there would be a direct case for developing robotics in the context of a broader metaverse effort.

What are some wider social and economic considerations around telepresence?

Mobility of labour - with telepresence robotics, wages for physical labour would, in theory, be pushed towards equalisation around the world. Some places may try to regulate this to protect local jobs; however, this may be difficult to police, and there would be pressure from projects that could benefit vastly from the technology.

Wealthy countries where capital is cheap will be able to afford telerobots and will draw on labour from jurisdictions where it is cheaper. This may in turn discourage the development of physical infrastructure in low-wage jurisdictions if their workers can telecommute to high-wage areas.

Another lens through which to view the impact of telepresence is that of Stratechery's framework on the dichotomy between the physical and virtual worlds:

...in our world the benevolent monopolist is the reality of atoms. Sure, we can construct borders and private clubs, just as the Metaverse has private property, but interoperability and a shared economy are inescapable in the real world; physical constraints are community.

From one perspective, telerobotics helps the real world acquire some of the advantages of the digital world, such as location-independence and access control. However, the current limitations of the real world act as positives, in that they prevent it from being distorted by economic incentives towards proprietary standards and gatekeepers. If you can simply turn off someone's robot access when they deviate from the norms in your telerobot city, their agency is at the mercy of whoever controls the telerobotic equipment, in the same way that users in today's digital economy are beholden to platform owners.

Telepresence doesn't bring the scale inherent in many digital systems - we'll have to wait for autonomous robots for that. However, it enables the reading and writing of atoms over the network. This is something truly novel and a huge capability unlock for the internet, which thus far has mostly been limited to the domain of information and has relied on poor proxies for the physical world (low-wage workers in person, or the ability only to organise physical systems rather than control them directly).

What are the functional requirements for telepresence technology?

There are 3 key components: the robot side, the user side, and the connection. We can break down each of these components into core functional areas.

The robot requires:

Some way to move around (locomotion)

The ability to affect its environment (manipulation)

Sensors to perceive the world for the human (perception)

The human interface requires:

Some way of providing sensory information to the human (display)

The ability for the human to communicate their intent about what the robot should do (input)

The connection requires:

High bandwidth and low latency

Portability, to allow freedom of movement wherever the robot operates
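To put a number on "low latency", here is a back-of-the-envelope sketch of the physical floor on round-trip delay between an operator in Toronto and a robot in Tokyo (the example used earlier). It assumes the signal follows the great-circle path through optical fibre with a refractive index of about 1.468, so it travels at roughly two-thirds the speed of light. Real routes are longer, and encoding, switching, and rendering all add delay on top. The coordinates are approximate city centres, chosen only for illustration.

```python
import math

EARTH_RADIUS_KM = 6371.0        # mean Earth radius
C_VACUUM_KM_S = 299_792.458     # speed of light in vacuum
FIBRE_INDEX = 1.468             # assumed refractive index of single-mode fibre

def great_circle_km(lat1, lon1, lat2, lon2):
    """Haversine distance between two points given in decimal degrees."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp = p2 - p1
    dl = math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))

def min_rtt_ms(distance_km, index=FIBRE_INDEX):
    """Lower bound on round-trip time over a straight fibre path, ignoring all processing."""
    speed_km_s = C_VACUUM_KM_S / index
    return 2 * distance_km / speed_km_s * 1000

# Approximate city-centre coordinates (illustrative)
toronto = (43.6532, -79.3832)
tokyo = (35.6762, 139.6503)

d = great_circle_km(*toronto, *tokyo)
rtt = min_rtt_ms(d)
print(f"distance ≈ {d:,.0f} km, minimum RTT ≈ {rtt:.0f} ms")
```

Under these assumptions the distance comes out at roughly 10,000 km and the floor at roughly 100 ms, before any processing at all. That is already well above the ~20 ms motion-to-photon figure often cited as a comfort target for VR, which is one reason the forward prediction discussed later in this FAQ is likely to matter.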

What current technologies exist to fulfil the listed needs? What needs more R&D?

On the locomotion front, legged robotics has made huge strides in recent years, with quadrupedal locomotion now basically a solved problem using learning-based methods; working gaits can be produced on a predictable schedule using off-the-shelf software. Systems for bipedal walking are also improving quickly, with products such as Digit bringing the cost down to the order of hundreds of thousands of dollars per unit rather than millions (and among quadrupeds, you can even buy a small robot dog from Xiaomi for US$1500). Unitree is planning to release a humanoid for $90k(!) soon, and presumably the Tesla, Figure, and 1X offerings will roll out at some point. Reliability would need to be high for such systems to do more than a quick demo; on many current systems it is unknown, or known to be poor.

For manipulation, progress in controls will allow the use of more complex hardware than traditional two-finger robotic grippers. For example, learned task-space-style control can generate low-level robot motion from nothing more than the trajectories of the objects to be manipulated. This could help combat latency and may make high-fidelity haptic feedback to the user less necessary for complex manipulation. Real-time hand tracking could be used in tandem for input; combining explicit hand pose reconstruction with task-space control could help improve speed (which isn't great with grippers).
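The learned controllers mentioned above are beyond a short example, but the underlying task-space idea can be shown with a classical, non-learned analogue: damped-least-squares resolved-rate control. The operator specifies where the end-effector should go (for instance, a tracked hand position mapped into the robot's workspace), and the controller works out joint motions via the arm's Jacobian. The planar two-link arm, its link lengths, the gain, and the damping value are all hypothetical, chosen only to illustrate the mapping from a task-space target to joint commands.

```python
import math

L1, L2 = 0.4, 0.3  # hypothetical link lengths in metres

def forward_kinematics(q1, q2):
    """End-effector (x, y) of a planar two-link arm with joint angles in radians."""
    x = L1 * math.cos(q1) + L2 * math.cos(q1 + q2)
    y = L1 * math.sin(q1) + L2 * math.sin(q1 + q2)
    return x, y

def jacobian(q1, q2):
    """2x2 Jacobian d(x, y)/d(q1, q2)."""
    s1, c1 = math.sin(q1), math.cos(q1)
    s12, c12 = math.sin(q1 + q2), math.cos(q1 + q2)
    return [[-L1 * s1 - L2 * s12, -L2 * s12],
            [L1 * c1 + L2 * c12, L2 * c12]]

def dls_step(q, target, gain=0.3, damping=0.05):
    """One step of dq = J^T (J J^T + damping^2 I)^-1 (gain * task-space error)."""
    x, y = forward_kinematics(*q)
    ex, ey = gain * (target[0] - x), gain * (target[1] - y)
    J = jacobian(*q)
    # A = J J^T + damping^2 I is a symmetric 2x2 matrix [[a, b], [b, d]]
    a = J[0][0] ** 2 + J[0][1] ** 2 + damping ** 2
    b = J[0][0] * J[1][0] + J[0][1] * J[1][1]
    d = J[1][0] ** 2 + J[1][1] ** 2 + damping ** 2
    det = a * d - b * b
    # w = A^-1 e, using the closed-form 2x2 inverse
    wx = (d * ex - b * ey) / det
    wy = (-b * ex + a * ey) / det
    # dq = J^T w
    dq1 = J[0][0] * wx + J[1][0] * wy
    dq2 = J[0][1] * wx + J[1][1] * wy
    return q[0] + dq1, q[1] + dq2

q = (0.3, 0.5)          # initial joint angles
target = (0.35, 0.45)   # e.g. a tracked hand position mapped into the workspace
for _ in range(300):
    q = dls_step(q, target)
print(forward_kinematics(*q))
```

The damping term keeps joint steps bounded near singular poses, such as a fully stretched arm, at the cost of slightly slower convergence. A learned controller of the kind described above would instead map object trajectories to motions directly.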

Hardware for anthropomorphic robotic hands remains an open question. It is advancing quickly, with efforts both to make more robust versions of products like the Allegro hand and, at other startups (e.g. Clone robotics), to make much more anthropomorphic hands that are purportedly much stronger.

With regards to sensing on the robot, from a controls perspective for both manipulation and locomotion it seems RGBD input will provide most of what you need. Tactile sensors on the hands would also be ideal for manipulating soft objects; fortunately these are currently coming down dramatically in cost.
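Part of why RGBD input goes a long way for control is that each depth pixel converts directly into a 3D point in the camera frame. Here is a minimal sketch using the standard pinhole camera model without lens distortion. The intrinsics (`fx`, `fy`, `cx`, `cy`) are hypothetical values for a 640x480 image, not those of any particular sensor.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Intrinsics:
    fx: float  # focal length in pixels, x
    fy: float  # focal length in pixels, y
    cx: float  # principal point in pixels, x
    cy: float  # principal point in pixels, y

def deproject(u, v, depth_m, k: Intrinsics):
    """Back-project pixel (u, v) with metric depth into a camera-frame point (x, y, z)."""
    x = (u - k.cx) * depth_m / k.fx
    y = (v - k.cy) * depth_m / k.fy
    return x, y, depth_m

k = Intrinsics(fx=600.0, fy=600.0, cx=320.0, cy=240.0)
# A pixel 100 px right of the image centre, observed 0.75 m away
print(deproject(420, 240, 0.75, k))
```

Applying this to every pixel yields a point cloud that locomotion and manipulation controllers can reason about geometrically.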

On the human interface side, many challenges are shared with virtual reality: display size and FoV, convenience of the hardware, and how to provide tactile feedback. So the display tech will likely be able to piggyback on increasingly good AR and VR tech from, for example, Apple and Meta. But there are a couple of key differences. In telerobotics you are controlling the robot remotely, so unlike in VR's locally rendered worlds, you can't give the user near-real-time feedback. Thus it seems likely that some degree of image prediction, or reconstructing the world in a simulator, predicting forward, and rendering it live, will be needed to combat this.
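The simplest form of predicting forward is dead reckoning: extrapolate the robot's last reported state by the age of that report before rendering it. The sketch below assumes a constant-velocity model in 2D and synchronised clocks. A real system would likely use richer motion models (a Kalman filter, say) and predict whole scenes rather than a single pose. The `PoseSample` type and its fields are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass

@dataclass
class PoseSample:
    t: float   # robot-side timestamp of the measurement (s)
    x: float   # position (m)
    y: float
    vx: float  # velocity estimate (m/s)
    vy: float

def predict_pose(sample: PoseSample, now: float) -> tuple[float, float]:
    """Constant-velocity extrapolation of the last received pose to the current time."""
    dt = max(0.0, now - sample.t)  # age of the sample; never extrapolate backwards
    return sample.x + sample.vx * dt, sample.y + sample.vy * dt

# A sample captured 120 ms ago while the robot walked forward at 1.2 m/s
last = PoseSample(t=10.000, x=2.0, y=0.0, vx=1.2, vy=0.0)
print(predict_pose(last, now=10.120))
```

Rendering the predicted pose rather than the stale one hides some of the delay for smooth motion; the error grows whenever the robot accelerates or the world changes within the latency window, which is why richer prediction is likely to be needed.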

The fact that the telerobot is interacting with the physical environment means that tactile feedback is likely important. There are a few demonstrated ways of doing this, for example HaptX's system, which applies force directly to the user's hands, and Facebook's wrist-based system. But many of these technologies are in relatively early development (the former requires you to wear a backpack!), so it's unclear what the right set of tradeoffs is. Do you scrap haptics entirely and do manipulation through more gesture-type interfaces with no tactile feedback, trusting the robot's own control system? Or is high-fidelity feedback essential for communicating user intent?

For connection hardware, piggybacking on 5G, or on newer LEO satellite platforms like Starlink where robots need to go into areas with poor service, seems the way to go. There is also the question of what level of bandwidth and realism your application needs. Based on what I've heard about Google's Project Starline, the most realistic telepresence system to date, the ceiling here seems quite high.
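To get a feel for the bandwidth question, here is a rough estimate for stereo video sent from the robot to a head-mounted display, raw versus compressed. The per-eye resolution, frame rate, 24 bits per pixel, and the 100:1 compression ratio are illustrative assumptions, not measurements of any particular system or codec.

```python
def video_bitrate_mbps(width, height, fps, bits_per_pixel=24, streams=1, compression_ratio=1.0):
    """Approximate bitrate in Mbit/s for `streams` video feeds at a given compression ratio."""
    raw_bps = width * height * bits_per_pixel * fps * streams
    return raw_bps / compression_ratio / 1e6

# Assumed stereo feed: 1920x1080 per eye at 60 fps
raw = video_bitrate_mbps(1920, 1080, 60, streams=2)
compressed = video_bitrate_mbps(1920, 1080, 60, streams=2, compression_ratio=100)
print(f"raw ≈ {raw:,.0f} Mbit/s, compressed ≈ {compressed:,.0f} Mbit/s")
```

Under these assumptions, raw stereo video needs around 6 Gbit/s while a 100:1 codec brings it to roughly 60 Mbit/s. Note that this traffic flows upstream from the robot, so the robot's uplink, not just the operator's download speed, sets the budget; higher resolutions, frame rates, or extra depth streams push the figure up.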

As for what to work on among these areas, I agree with Ben Reinhardt's take that a systems approach is needed, so the impact of working on any individual area in isolation is limited. Most of the components exist, but they can only be built properly in their final form when accounting for the whole system.

The adjacent possible

Developing telerobotics technology will have several auxiliary benefits:

Bootstrapping autonomous robots - If telerobotics reaches scale, it will dramatically reduce the cost and improve the hardware capabilities of robots. At sufficient scale, it will also collect data on a large variety of manipulation tasks. Together these are likely to allow gradual automation of many repetitive tasks in software, probably starting with "Tesla Autopilot"-like assistive features before scaling up to full manipulation tasks.
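One simple way to picture that assistive stage is linear arbitration between the operator's command and an autonomous policy's command, an idea from the shared-autonomy literature. Here is a minimal sketch, assuming commands are end-effector velocity vectors and that the autonomous policy reports a confidence in [0, 1]. The function name and the cap on assistance (`max_assist`) are hypothetical design choices.

```python
def blend_commands(human, robot, confidence, max_assist=0.8):
    """Linearly arbitrate between operator and autonomous velocity commands.

    alpha is the share of control given to the autonomous policy: it grows with
    the policy's confidence but is capped so the operator always keeps authority.
    """
    if len(human) != len(robot):
        raise ValueError("commands must have the same dimension")
    alpha = min(max(confidence, 0.0), 1.0) * max_assist
    return [(1 - alpha) * h + alpha * r for h, r in zip(human, robot)]

# Operator pushes the gripper along +x; the policy, moderately confident, also nudges it down
print(blend_commands([0.10, 0.0, 0.0], [0.08, -0.02, 0.0], confidence=0.5))
```

Capping the assistance means the operator can always override the policy. As the policy's confidence and track record on a task improve, the cap could plausibly be raised, which is one route from assistance towards full automation of that task.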

A "killer app" for VR? - As telepresence requires a VR experience to be truly immersive, if it becomes widely adopted (for example as a proxy for travel) it will pull VR along with it. This is likely to cause a much larger shift towards VR technologies once individuals have access to and are used to these systems.

Any good resources for further reading?

Marvin Minsky’s "Telepresence" manifesto (Omni, 1980)

Ben Reinhardt's "Bottlenecks in Telerobotics"

Venkatesh Rao articulates a good case for biomorphic robots here, which I agree is a key aspect of making telerobotics actually general-purpose

Conversations with Tyler (CWT) with Balaji Srinivasan has a short segment on the topic

The ANA Avatar XPRIZE, a competition for telerobotic avatar systems, concluded in 2022.

If you have suggestions for more items for me to include on this FAQ or are interested in discussing these topics further, get in touch.

This post takes stylistic inspiration from the various longevity and climate FAQs which others have written.

[1] My personal probability of near-term AGI remains relatively low, hence my motivation to write this post at all. But YMMV on this one…